We have a client who has engaged us to work around an outside integration that has limited ability to adapt to their needs. The goal is to make the best of the data the integration can provide, and to streamline and optimize processing on the Salesforce side of the fence.

This outside integrator provides a stream of events that represent customer data interactions at brick-and-mortar locations. Exactly how these events are captured on the store side is not relevant to this integration. The following events can be expected to come through the event stream:

- customer.entered.store

- customer.shopping

- customer.checked.out

- customer.exited.store

These events will only ever be passed for customers who have registered an in-store account. The goal is to use these events to reflect a live representation of each customer's journey in store. This data will be used to identify sales patterns such as frequency, brands, and TIS (Time In Store).

Phase One: Integration Assessment

The first step in any successful integration is asking the right questions to gather as much information as possible. Detailed information up front is invaluable to our ability to design the optimal solution for the given integration.

Question One:

Does this third party provide a method to send these events in batches or are they only able to send a single event per request?

Answer One:

There is currently no process to send batch events in a single request. Each request contains a single customer event with only information relevant to that event.

Conclusion:

This simple question and answer provides an immense amount of value to the solution we will end up designing. We now know that any bulkification optimizations will have to be made within Salesforce.

Question Two:

What information is provided within each event payload? Do the various event payloads conform to a standardized pattern, or does each event contain unique data? Can an example of each event payload be provided up front to model against?

Answer Two:

The event payload structure is standardized, with some unique data points specific to the checked-out event that provide details on the products purchased during checkout.

Find a sample of each event payload below.

Code Example


Conclusion:

We now have examples of each possible payload that will pass into Salesforce via this integration, and we know the overall payload structure is standardized: a JSON object containing a series of key-value pairs, with the following keys always present:

- id: a unique event id

- name: the event name, one of the four events outlined above

- timestamp: the date/time the event took place

- customer_id: an id representing the customer the event is associated with

We should also take note that the customer.checked.out and customer.exited.store events have additional keys associated with them. These contain additional data relevant to the given event type:

- items: an array of objects, each representing an item purchased during checkout. Each item provides data such as the product id, name, pricing, and promotions.

- time_elapsed: provides the time a customer has spent within the store from the time they enter to the time they exit.
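To make this payload contract concrete, here is a minimal sketch of a validation step that checks the keys listed above. It is written in plain Java so it can run outside Salesforce. The class and method names are hypothetical, and the sketch assumes the JSON body has already been parsed into a map; in Apex, `JSON.deserializeUntyped` would play that role.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical validator for the event payload keys described in the article.
// Assumes the JSON body has already been parsed into a Map<String, Object>.
final class StoreEventPayloadValidator {
    // Keys present on every event.
    static final List<String> REQUIRED_KEYS =
            List.of("id", "name", "timestamp", "customer_id");

    // The four event names the integration can send.
    static final Set<String> EVENT_NAMES = Set.of(
            "customer.entered.store", "customer.shopping",
            "customer.checked.out", "customer.exited.store");

    // Returns a list of problems; an empty list means the payload looks usable.
    static List<String> validate(Map<String, Object> payload) {
        List<String> problems = new ArrayList<>();
        for (String key : REQUIRED_KEYS) {
            if (payload.get(key) == null) {
                problems.add("missing key: " + key);
            }
        }
        Object name = payload.get("name");
        if (name != null && !EVENT_NAMES.contains(name)) {
            problems.add("unknown event name: " + name);
        }
        // Event-specific keys: items on checkout, time_elapsed on exit.
        if ("customer.checked.out".equals(name) && payload.get("items") == null) {
            problems.add("missing key: items");
        }
        if ("customer.exited.store".equals(name) && payload.get("time_elapsed") == null) {
            problems.add("missing key: time_elapsed");
        }
        return problems;
    }
}
```

A payload that fails validation is a natural candidate for the 500 response discussed under Question Four, although whether malformed events should be retried at all is a decision to confirm with the client.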

Question Three:

Can the integration partner guarantee the sequence of events for a given customer? Is there a possibility of events arriving out of order?

Answer Three:

The order of events is managed on the integration side: each event must be sent successfully before the next event in the sequence is sent. While uncommon, it is possible in rare cases for a duplicate event sequence to be transmitted, which could cause duplicate data to appear in the stream.

Conclusion:

So we don’t have to worry about controlling the sequence of events on the Salesforce side. However, we did learn about a fringe case in which duplicate event streams are sent over, which we will want to plan for in our final solution.

Question Four:

Given a scenario where an event fails to be ingested by Salesforce, is it possible for us to send some kind of response back to the integration to indicate the failure and its cause? Does the integration have a retry mechanism in place for when this occurs?

Answer Four:

The Salesforce endpoint should respond with a 500 status code to indicate a failure. The error message is not used by the integration, but the 500 will push the event into a queue for a retry attempt.

Conclusion:

This answer tells us which response will trigger the integrator’s built-in retry mechanism. Combined with the answer to the previous question, we know that events are only ever pushed in sequence, meaning that if an event is put into the retry queue, the events that would follow it will not be processed until the retry has completed successfully.

Phase Two: Solution Design

How will data get into Salesforce?

Implementing a REST endpoint in Apex, paired with a Connected App, offers a secure, scalable way for external integrations to authenticate and feed data into Salesforce. This setup not only ensures data is efficiently ingested but also leverages Salesforce’s robust security model. Through Connected Apps, external services can authenticate using OAuth, providing a secure method to access Salesforce data, and the Apex REST endpoint allows for tailored processing of incoming data, optimizing how it’s handled within the Salesforce environment.
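Here is a minimal plain-Java model of the endpoint's contract with the integrator. The endpoint stores the event and answers 200, or it answers 500 on any failure so that the event lands in the integrator's retry queue. `EventStore` and `StoreEventEndpoint` are hypothetical names. In Apex, the same logic would live in an `@RestResource` class whose `@HttpPost` method reads `RestContext.request.requestBody` and sets `RestContext.response.statusCode`.

```java
import java.util.Map;

// Hypothetical storage boundary; in Salesforce this would be the insert of a
// single Customer_Store_Event__c record.
interface EventStore {
    void save(Map<String, Object> payload) throws Exception;
}

// Models the REST handler: 200 when the event is stored, 500 on any failure
// so the integrator pushes the event into its retry queue.
final class StoreEventEndpoint {
    private final EventStore store;

    StoreEventEndpoint(EventStore store) {
        this.store = store;
    }

    int handlePost(Map<String, Object> payload) {
        try {
            store.save(payload);
            return 200;
        } catch (Exception e) {
            // The integrator ignores the message body; only the status code matters.
            return 500;
        }
    }
}
```

Keeping the handler this thin is deliberate. Because the only work done inside the request is a single insert, a failure response maps cleanly onto the integrator's retry behavior.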

How can we optimize and bulkify?

When handling single incoming event payloads, directly updating Salesforce records for each can strain system resources. This approach, while manageable transaction-wise, can lead to performance degradation due to the increased load on Salesforce’s database, potentially slowing down operations system-wide. It also heightens the risk of record locking conflicts, where simultaneous updates to the same record or related records can block one another, leading to processing delays. Optimizing how these events are managed within Salesforce is crucial to maintain efficiency and ensure smooth operation, especially under high volumes.

Because the integrator does not provide a means to send events in bulk, we’ll need to optimize as much as possible on the Salesforce side as the events enter the system.

As mentioned earlier, updating Account records directly as the events come in is not an effective solution. Instead, we will create a custom object record for each event. This reduces the work of the incoming request to inserting a single new record that represents the event. Because each of these records is new and unique, there is no risk of record locking or the other concerns mentioned earlier. We’ll call our new object Customer_Store_Event__c, and it will have the following fields:

Standard Fields

- Name: String: matches the event name from the payload

Custom Fields

- CustomerId: String: stores the event's customer id

- TimeStamp: DateTime: stores the event timestamp

- EventId: String: stores the unique event id

- CheckoutItems: LongText: stores the raw items array in the case of a checkout event

- ShoppingTimeElapsed: Number: stores the elapsed shopping time provided in the case of a store exit event

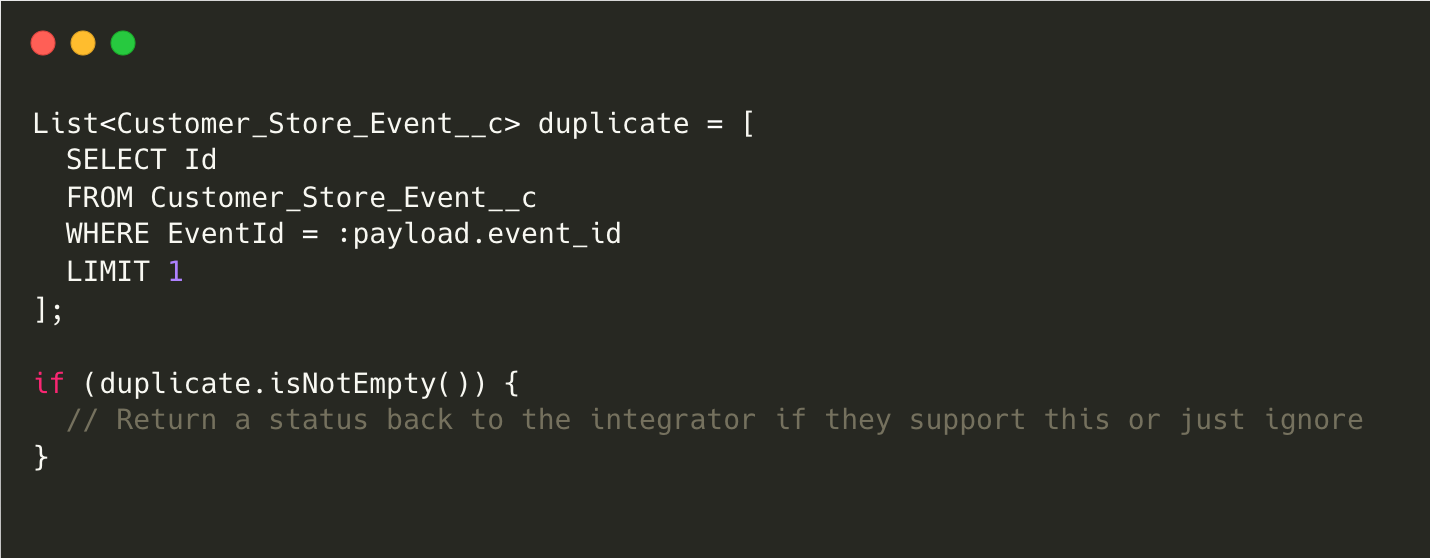
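The field list above can be sketched as a plain-Java mapping from payload to record. This is an illustration under stated assumptions, not the production Apex. The sketch assumes that the payload has been pre-parsed into a map, that timestamp is ISO-8601, and that items is kept as its raw JSON text. The elapsed time is modeled as a number because the payload's unit is not specified. The class name is hypothetical.

```java
import java.time.Instant;
import java.util.Map;

// Plain-Java stand-in for a Customer_Store_Event__c record; field names mirror
// the field list in the article.
final class CustomerStoreEvent {
    String name;
    String customerId;
    Instant timeStamp;
    String eventId;
    String checkoutItems;       // raw items JSON, checkout events only (assumed pre-serialized)
    Long shoppingTimeElapsed;   // exit events only; unit assumed to follow the payload

    // Copies payload keys onto record fields. Assumes timestamp is ISO-8601.
    static CustomerStoreEvent fromPayload(Map<String, Object> p) {
        CustomerStoreEvent e = new CustomerStoreEvent();
        e.name = (String) p.get("name");
        e.customerId = (String) p.get("customer_id");
        e.timeStamp = Instant.parse((String) p.get("timestamp"));
        e.eventId = (String) p.get("id");
        e.checkoutItems = (String) p.get("items");
        Object elapsed = p.get("time_elapsed");
        e.shoppingTimeElapsed = elapsed == null ? null : ((Number) elapsed).longValue();
        return e;
    }
}
```

Storing items as raw text keeps the ingestion step cheap. Parsing the items array is deferred to the asynchronous processing described later.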

How do we deal with fringe duplication possibility?

Now that we have an effective pattern for ingesting these events and storing them in Salesforce for later bulk processing, we need to account for the possibility of duplicate events. This detail was revealed in the answer to question three as a possibility in some rare cases. Luckily, it is simple enough to handle in our REST endpoint. Every payload carries a unique event id, and that id is stored on our new custom object records. With a simple query, we can filter out incoming events whose event id already exists in Salesforce.

Example Code

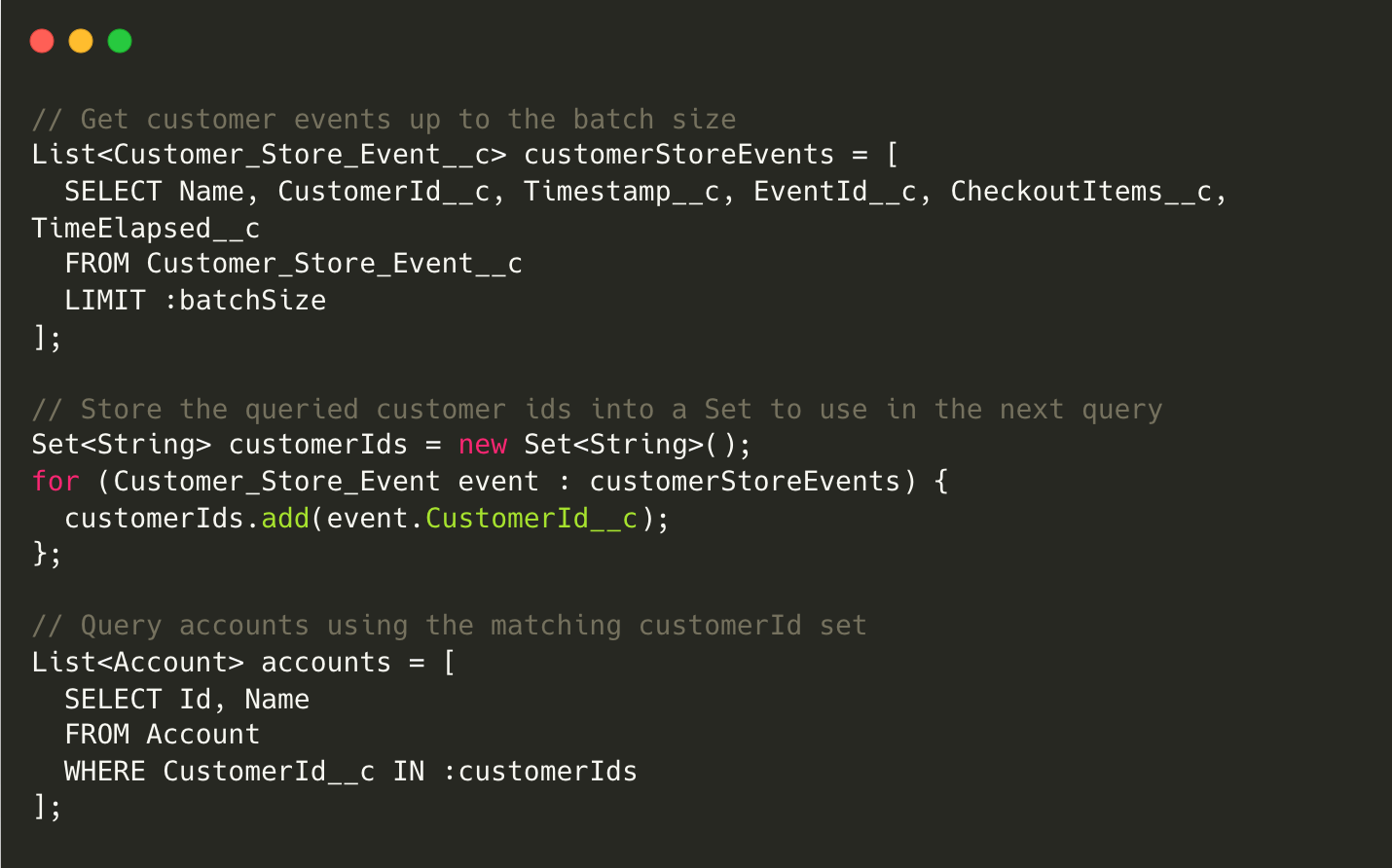
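The duplicate check can be modeled in plain Java as follows. `DuplicateEventFilter` is hypothetical, and its constructor argument stands in for the ids returned by querying Customer_Store_Event__c on EventId. One assumption is worth stating: when a duplicate is skipped, the endpoint should still answer with a success code. A 500 would send the duplicate into the integrator's retry queue and block the events behind it.

```java
import java.util.HashSet;
import java.util.Set;

// Models the duplicate check in the REST endpoint. The constructor argument
// stands in for event ids already stored on Customer_Store_Event__c records.
final class DuplicateEventFilter {
    private final Set<String> knownEventIds;

    DuplicateEventFilter(Set<String> existingIds) {
        this.knownEventIds = new HashSet<>(existingIds);
    }

    // Returns true if the event is new and should be inserted; records the id
    // so a repeat of the same id is rejected afterwards.
    boolean accept(String eventId) {
        return knownEventIds.add(eventId);
    }
}
```

A query-then-insert check is not atomic. Marking the EventId field as a unique External ID in Salesforce would add a database-level guarantee on top of this check.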

What is our primary processing mechanism?

Now that we have these custom object records containing our customers’ in-store event data, we have a couple of questions to answer about processing and using this data.

By what means do we want to process these objects?

To optimize the processing of customer event data, our solution will leverage Queueable and Schedulable Apex. This approach was selected over Batch Apex and other options because it gives finer control over execution, allowing dynamic adjustment to processing loads and the ability to chain jobs for complex workflows. This choice keeps data handling efficient, maintains system performance, and provides timely insight into customer behavior.
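The schedule-then-chain flow can be sketched in plain Java. `ChainedEventProcessor` and its `chunkSize` parameter are hypothetical. Each call to `executeOnce` stands for one Queueable execution that processes a chunk of pending Customer_Store_Event__c records and reports whether another job should be chained. In Apex, the Schedulable class's `execute(SchedulableContext)` would call `System.enqueueJob`, and each Queueable would enqueue its successor while records remain. The loop here simulates that asynchronous chain synchronously.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Plain-Java model of schedule -> queueable -> chained queueable.
final class ChainedEventProcessor {
    private final Deque<String> pendingEvents; // stand-in for unprocessed records
    private final int chunkSize;               // hypothetical per-job limit
    final List<List<String>> jobsRun = new ArrayList<>();

    ChainedEventProcessor(List<String> pending, int chunkSize) {
        this.pendingEvents = new ArrayDeque<>(pending);
        this.chunkSize = chunkSize;
    }

    // One "queueable" execution: take up to chunkSize events, then report
    // whether another job needs to be chained.
    boolean executeOnce() {
        List<String> chunk = new ArrayList<>();
        while (!pendingEvents.isEmpty() && chunk.size() < chunkSize) {
            chunk.add(pendingEvents.poll());
        }
        jobsRun.add(chunk);
        return !pendingEvents.isEmpty();
    }

    // What the scheduled trigger kicks off: keep chaining until the backlog is empty.
    void runFromSchedule() {
        boolean more;
        do {
            more = executeOnce();
        } while (more);
    }
}
```

Chunking is what restores bulkification. Events arrive one per request, but each job works through many of them inside one transaction.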

At what interval do we need to process these event objects?

This process will run every 10 minutes to balance a near-real-time representation of the data in Salesforce against efficient use of asynchronous resources. Once the solution has been tested further, we can tweak this cadence based on traffic and available resources.
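Scheduling a 10-minute cadence needs one extra step. As far as we are aware, Salesforce's cron syntax for scheduled Apex does not accept increments in the minutes field. A common approach is therefore to schedule several jobs, one per slot. The sketch below uses a hypothetical class name and only builds the cron strings, in the Seconds Minutes Hours Day_of_month Month Day_of_week order. Each string would be passed to `System.schedule` with a unique job name.

```java
import java.util.ArrayList;
import java.util.List;

// Builds one cron expression per slot within the hour. With intervalMinutes = 10
// this yields six expressions: minute 0, 10, 20, 30, 40 and 50 of every hour.
final class IntervalSchedule {
    static List<String> cronExpressions(int intervalMinutes) {
        if (intervalMinutes <= 0 || 60 % intervalMinutes != 0) {
            throw new IllegalArgumentException("interval must evenly divide 60");
        }
        List<String> expressions = new ArrayList<>();
        for (int minute = 0; minute < 60; minute += intervalMinutes) {
            // Seconds Minutes Hours Day_of_month Month Day_of_week
            expressions.add("0 " + minute + " * * * ?");
        }
        return expressions;
    }
}
```

Generating the expressions from a single interval value keeps later tuning of the cadence to a one-line change.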

Where is this data being processed to?

Because this event data is tied to registered in-store customers, the logical place to store these details is on the associated Account record. To accomplish this, we need to make sure the external customer ids are present in Salesforce. If they are not already available on the Account, we will need to work with the client to import them. For the purpose of this example, our client already maintains these ids on the Account object in a custom field called In_Store_Customer_Id__c. As we process these events in our Queueable context, we can easily query for the matching customer Account.

Example Code

The previous code example is not fully fleshed out and makes some assumptions for the purposes of this article. It presents enough to convey the high-level details.
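As a complement, here is a minimal plain-Java sketch of the bulkified matching pattern. It collects the customer ids from a chunk of events, resolves them to Accounts with a single query on In_Store_Customer_Id__c, and then rolls the events up per Account. The class names and the two metrics (visit count and total time in store) are illustrative assumptions. The map passed to `aggregate` stands in for the query result. Events with no matching Account are set aside rather than dropped, which ties into the error handling raised in the solution review.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Minimal stand-in for an unprocessed Customer_Store_Event__c record.
final class QueuedStoreEvent {
    final String name;
    final String customerId;
    final Long timeElapsed; // only set on customer.exited.store events

    QueuedStoreEvent(String name, String customerId, Long timeElapsed) {
        this.name = name;
        this.customerId = customerId;
        this.timeElapsed = timeElapsed;
    }
}

// Hypothetical per-Account rollup; metric names are illustrative only.
final class AccountStoreStats {
    int visits;            // count of customer.entered.store events
    long totalTimeInStore; // sum of time_elapsed from exit events
}

final class AccountEventAggregator {
    // Collect every customer id in the chunk so Accounts can be fetched with one
    // query, e.g. WHERE In_Store_Customer_Id__c IN :customerIds in Apex.
    static Set<String> customerIds(List<QueuedStoreEvent> events) {
        Set<String> ids = new HashSet<>();
        for (QueuedStoreEvent e : events) {
            ids.add(e.customerId);
        }
        return ids;
    }

    // accountIdByCustomerId is the result of that single query, keyed by
    // In_Store_Customer_Id__c. Events without a matching Account go to unmatched.
    static Map<String, AccountStoreStats> aggregate(
            List<QueuedStoreEvent> events,
            Map<String, String> accountIdByCustomerId,
            List<QueuedStoreEvent> unmatched) {
        Map<String, AccountStoreStats> byAccount = new HashMap<>();
        for (QueuedStoreEvent e : events) {
            String accountId = accountIdByCustomerId.get(e.customerId);
            if (accountId == null) {
                unmatched.add(e);
                continue;
            }
            AccountStoreStats stats = byAccount.computeIfAbsent(accountId, id -> new AccountStoreStats());
            if ("customer.entered.store".equals(e.name)) {
                stats.visits++;
            } else if ("customer.exited.store".equals(e.name) && e.timeElapsed != null) {
                stats.totalTimeInStore += e.timeElapsed;
            }
        }
        return byAccount;
    }
}
```

Aggregating per Account before writing means each Account is updated at most once per job. That limits the record-locking risk that motivated the custom object in the first place.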

Phase Three: Solution Review

After implementing the REST endpoint in Apex and setting up our custom object, Customer_Store_Event__c, to capture the stream of events from the integration partner, it’s time to reflect on the efficacy of our solution and its alignment with the project’s goals.

Key Takeaways from Implementation:

Efficient Data Ingestion: Our approach to creating a custom object for each event has streamlined the process of data ingestion into Salesforce. This method has effectively bypassed potential bottlenecks associated with direct updates to Account records, demonstrating a high degree of efficiency in handling incoming data.

Optimization and Bulkification: By leveraging Queueable and Schedulable Apex for processing the custom event objects, we’ve introduced a scalable solution that adjusts to processing loads dynamically. This choice has proven crucial in maintaining system performance while ensuring data is processed and utilized effectively.

Fringe Duplication Handling: The strategy of querying for existing event IDs before inserting new events has effectively mitigated the risk of duplicate data caused by the integration partner’s rare duplicate sequences. This preventive measure has preserved the integrity of our data, ensuring that our Salesforce environment reflects accurate customer interactions.

Data Processing to Account Records: The logical step of associating event data with customer Account records based on in-store customer IDs has laid the groundwork for insightful analytics on customer behavior. This connection not only enriches customer profiles but also opens avenues for advanced analysis and personalized engagement strategies.

Areas for Further Review and Adjustment:

Processing Interval Evaluation: While the decision to process event objects every 10 minutes strikes a balance between real-time data representation and resource optimization, ongoing monitoring is essential. Adjustments may be necessary as we gather more data on system load and event volume fluctuations.

Data Representation and Utilization: The ultimate success of this integration hinges on how the processed data informs business strategies and customer engagement. Further review is needed to ensure that the insights derived from the event data are actionable and align with the client’s objectives. This includes evaluating the dashboard and reporting mechanisms in place to surface these insights.

Handling Data Processing Issues: As we take ownership of the data from the event stream, developing a robust mechanism to handle processing errors becomes paramount. This includes setting up alerts for failed processing attempts and creating a clear workflow for addressing these issues to minimize impact on data quality and availability.

Final Thoughts:

Our journey to integrate and utilize the event stream from the client’s brick-and-mortar locations into Salesforce has been both challenging and enlightening. As we move into the final review phase, it’s crucial to engage with the client for feedback, assess the system’s performance under real-world conditions, and remain flexible to iterate on our solution. The goal has always been to not just capture data but to transform it into meaningful, actionable insights that empower our client to make informed decisions and enhance the customer experience.

By maintaining a focus on scalability, data integrity, and actionable analytics, we are well on our way to realizing the transformative potential of this Salesforce integration, setting a new standard for customer journey analysis in physical retail spaces.

Looking Ahead:

The success of this project will be measured not just by the technical robustness of our solution but by its impact on the client’s business. As we refine our approach and continue to innovate, the lessons learned here will undoubtedly inform future integrations, underscoring our commitment to delivering solutions that not only meet but exceed our clients’ expectations.